OpenRoboCare

Abstract

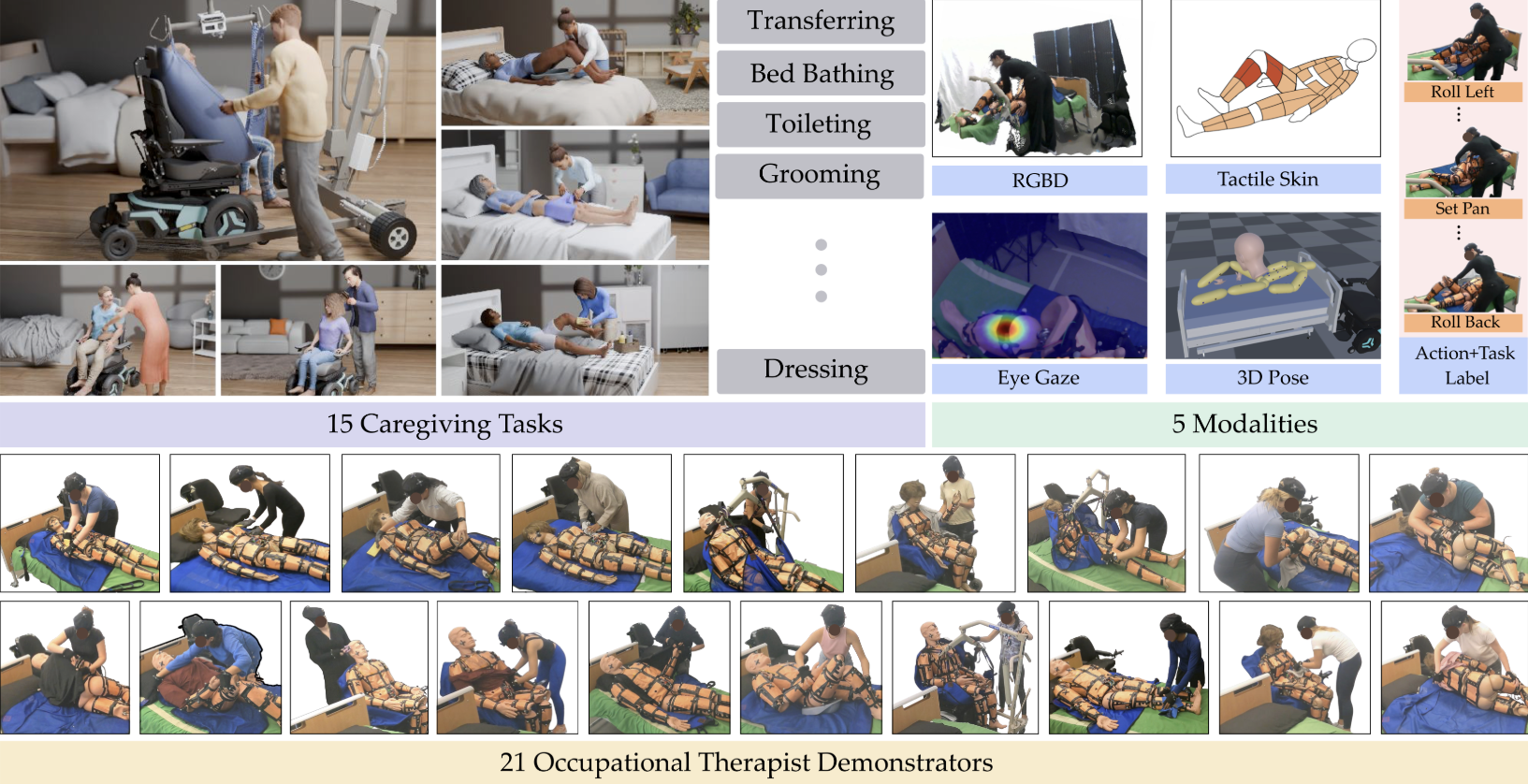

We present OpenRoboCare, a multi-modal dataset for robot-assisted caregiving, capturing expert occupational therapist demonstrations of Activities of Daily Living (ADLs).

Caregiving tasks involve complex physical human-robot interactions, requiring precise perception under occlusions, safe physical contact, and long-horizon planning. While recent advances in robot learning from demonstrations have shown promise, the field lacks a large-scale, diverse, expert-driven dataset that captures real-world caregiving routines.

To address this gap, we collected data from 21 occupational therapists performing 15 ADL tasks on two manikins. The dataset spans five modalities: RGBD video, pose tracking, eye-gaze tracking, task and action annotations, and tactile sensing. Together, these provide rich multi-modal insights into caregiver movement, attention, force application, and task execution strategies. We further analyze expert caregiving principles and strategies, offering insights to improve robot efficiency and task feasibility. Additionally, our evaluations demonstrate that OpenRoboCare presents challenges for state-of-the-art robot perception and human activity recognition methods, both of which are critical for developing safe and adaptive assistive robots. These results highlight the value of the dataset.

More Details: https://robo-care.github.io/

Publications:

Xiaoyu Liang, Ziang Liu, Kelvin Lin, Edward Gu, Ruolin Ye, Tam Nguyen, Cynthia Hsu, Zhanxin Wu, Xiaoman Yang, Christy Sum Yu Cheung, Harold Soh, Katherine Dimitropoulou, Tapomayukh Bhattacharjee. “OpenRoboCare: A Multi-Modal Multi-Task Expert Demonstration Dataset for Robot Caregiving”. In Submission.